A Commonplace Book

From Steven Johnson’s Where Good Ideas Come From: The Natural History of Innovation:

Darwin’s notebooks lie at the tail end of a long and fruitful tradition that peaked in Enlightenment-era Europe, particularly in England: the practice of maintaining a “commonplace” book. Scholars, amateur scientists, aspiring men of letters—just about anyone with intellectual ambition in the seventeenth and eighteenth centuries was likely to keep a commonplace book. The great minds of the period—Milton, Bacon, Locke—were zealous believers in the memory-enhancing powers of the commonplace book. In its most customary form, “commonplacing,” as it was called, involved transcribing interesting or inspirational passages from one’s reading, assembling a personalized encyclopedia of quotations. There is a distinct self-help quality to the early descriptions of commonplacing’s virtues: maintaining the books enabled one to “lay up a fund of knowledge, from which we may at all times select what is useful in the several pursuits of life.”

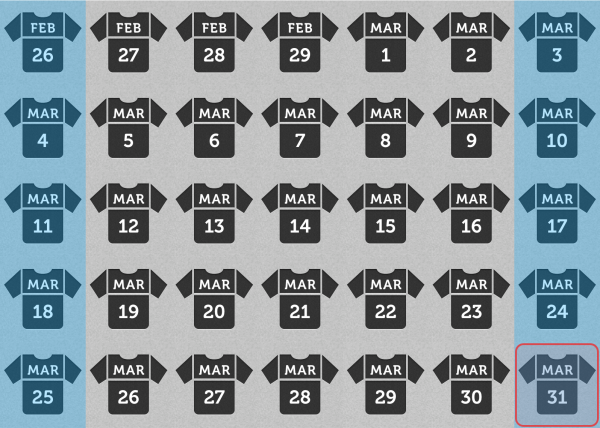

John Locke first began maintaining a commonplace book in 1652, during his first year at Oxford. Over the next decade he developed and refined an elaborate system for indexing the book’s content. Locke thought his method important enough that he appended it to a printing of his canonical work, An Essay Concerning Human Understanding. Locke’s approach seems almost comical in its intricacy, but it was a response to a specific set of design constraints: creating a functional index in only two pages that could be expanded as the commonplace book accumulated more quotes and observations:

When I meet with any thing, that I think fit to put into my common-place-book, I first find a proper head. Suppose for example that the head be EPISTOLA, I look unto the index for the first letter and the following vowel which in this instance are E. i. if in the space marked E. i. there is any number that directs me to the page designed for words that begin with an E and whose first vowel after the initial letter is I, I must then write under the word Epistola in that page what I have to remark.
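Locke's index is, in modern terms, a small lookup table keyed by two characters: the head's initial letter and the first vowel after it. The sketch below is a minimal illustration under stated assumptions, not a reconstruction of Locke's full method. The five vowels a, e, i, o, u, the "-" fallback for heads with no later vowel, the choice of page 3 as the first content page, and the names `index_key` and `CommonplaceBook` are all hypothetical. It also ignores what happens when a page fills up.

```python
from __future__ import annotations

VOWELS = "aeiou"  # assumption: only the five plain vowels form index columns


def index_key(head: str) -> tuple[str, str]:
    """Return (initial letter, first vowel after the initial) for a head word.

    Hypothetical fallback: a head with no vowel after its initial maps to '-'.
    """
    word = head.strip().lower()
    if not word:
        raise ValueError("a head must be a non-empty word")
    first_vowel = next((c for c in word[1:] if c in VOWELS), "-")
    return word[0].upper(), first_vowel


class CommonplaceBook:
    """Toy model: a small index of page numbers plus an open-ended body."""

    def __init__(self) -> None:
        self.index: dict[tuple[str, str], int] = {}     # (letter, vowel) -> page
        self.pages: dict[int, list[tuple[str, str]]] = {}  # page -> (head, remark)
        self.next_blank_page = 3  # hypothetical: pages 1-2 hold the index itself

    def add(self, head: str, remark: str) -> int:
        """File a remark under its head and return the page it was written on."""
        key = index_key(head)
        if key not in self.index:
            # No number in this cell yet: claim the next blank page for it.
            self.index[key] = self.next_blank_page
            self.pages[self.next_blank_page] = []
            self.next_blank_page += 1
        page = self.index[key]
        self.pages[page].append((head.upper(), remark))
        return page

    def lookup(self, head: str) -> list[str]:
        """Find every remark filed under exactly this head."""
        page = self.index.get(index_key(head))
        if page is None:
            return []
        return [remark for h, remark in self.pages[page] if h == head.upper()]


if __name__ == "__main__":
    book = CommonplaceBook()
    print(index_key("EPISTOLA"))                  # ('E', 'i'), as in Locke's example
    print(book.add("Epistola", "on letters"))     # 3: first head in cell E.i
    print(book.add("Epitaph", "on tombstones"))   # 3: also E.i, so same page
    print(book.add("Exercise", "on the body"))    # 4: cell E.e gets a new page
    print(book.lookup("epistola"))                # ['on letters']
```

The design point matches the passage: the index only says which page to turn to, and different heads that share a cell (here EPISTOLA and EPITAPH) end up side by side on the same page, which is how the index stays small while the body keeps growing.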

Locke’s method proved so popular that a century later, an enterprising publisher named John Bell printed a notebook entitled “Bell’s Common-Place Book, Formed generally upon the Principles Recommended and Practised by Mr Locke.” The book included eight pages of instructions on Locke’s indexing method, a system which not only made it easier to find passages, but also served the higher purpose of “facilitat[ing] reflexive thought.” Bell’s volume would be the basis for one of the most famous commonplace books of the late eighteenth century, maintained from 1776 to 1787 by Erasmus Darwin, Charles’s grandfather. At the very end of his life, while working on a biography of his grandfather, Charles obtained what he called “the great book” from his cousin Reginald. In the biography, the younger Darwin captures the book’s marvelous diversity: “There are schemes and sketches for an improved lamp, like our present moderators; candlesticks with telescope stands so as to be raised at pleasure to any required height; a manifold writer; a knitting loom for stockings; a weighing machine; a surveying machine; a flying bird, with an ingenious escapement for the movement of the wings, and he suggests gunpowder or compressed air as the motive power.”

The tradition of the commonplace book contains a central tension between order and chaos, between the desire for methodical arrangement, and the desire for surprising new links of association. For some Enlightenment-era advocates, the systematic indexing of the commonplace book became an aspirational metaphor for one’s own mental life. The dissenting preacher John Mason wrote in 1745:

Think it not enough to furnish this Store-house of the Mind with good Thoughts, but lay them up there in Order, digested or ranged under proper Subjects or Classes. That whatever Subject you have Occasion to think or talk upon you may have recourse immediately to a good Thought, which you heretofore laid up there under that Subject. So that the very Mention of the Subject may bring the Thought to hand; by which means you will carry a regular Common Place-Book in your Memory.

Others, including Priestley and both Darwins, used their commonplace books as a repository for a vast miscellany of hunches. The historian Robert Darnton describes this tangled mix of writing and reading:

Unlike modern readers, who follow the flow of a narrative from beginning to end, early modern Englishmen read in fits and starts and jumped from book to book. They broke texts into fragments and assembled them into new patterns by transcribing them in different sections of their notebooks. Then they reread the copies and rearranged the patterns while adding more excerpts. Reading and writing were therefore inseparable activities. They belonged to a continuous effort to make sense of things, for the world was full of signs: you could read your way through it; and by keeping an account of your readings, you made a book of your own, one stamped with your personality.

Each rereading of the commonplace book becomes a new kind of revelation. You see the evolutionary paths of all your past hunches: the ones that turned out to be red herrings; the ones that turned out to be too obvious to write; even the ones that turned into entire books. But each encounter holds the promise that some long-forgotten hunch will connect in a new way with some emerging obsession. The beauty of Locke’s scheme was that it provided just enough order to find snippets when you were looking for them, but at the same time it allowed the main body of the commonplace book to have its own unruly, unplanned meanderings. Imposing too much order runs the risk of orphaning a promising hunch in a larger project that has died, and it makes it difficult for those ideas to mingle and breed when you revisit them. You need a system for capturing hunches, but not necessarily categorizing them, because categories can build barriers between disparate ideas, restrict them to their own conceptual islands. This is one way in which the human history of innovation deviates from the natural history. New ideas do not thrive on archipelagos.