Amperlamb

He thinks I’m working on parts. I’m working on concepts.

The following quote is from Zen and the Art of Motorcycle Maintenance by Robert Pirsig. (The John mentioned is the protagonist’s buddy who wants to escape modern technological life via a motorcycle he disdains to tune up):

Precision instruments are designed to achieve an idea, dimensional precision, whose perfection is impossible. There is no perfectly shaped part of the motorcycle and never will be, but when you come as close as these instruments take you, remarkable things happen, and you go flying across the countryside under a power that would be called magic if it were not so completely rational in every way. It’s the understanding of this rational intellectual idea that’s fundamental. John looks at the motorcycle and he sees steel in various shapes and has negative feelings about these steel shapes and turns off the whole thing. I look at the shapes of the steel now and I see ideas. He thinks I’m working on parts. I’m working on concepts.

I was talking about these concepts yesterday when I said that a motorcycle can be divided according to its components and according to its functions. When I said that suddenly I created a set of boxes with the following arrangement:

And when I said the components may be subdivided into a power assembly and a running assembly, suddenly appear some more little boxes:

And you see that every time I made a further division, up came more boxes based on these divisions until I had a huge pyramid of boxes. Finally you see that while I was splitting the cycle up into finer and finer pieces, I was also building a structure.

This structure of concepts is formally called a hierarchy and since ancient times has been a basic structure for all Western knowledge. Kingdoms, empires, churches, armies have all been structured into hierarchies. Modern businesses are so structured. Tables of contents of reference material are so structured, mechanical assemblies, computer software, all scientific and technical knowledge is so structured—so much so that in some fields such as biology, the hierarchy of kingdom-phylum-class-order-family-genus-species is almost an icon.

The box “motorcycle” contains the boxes “components” and “functions.” The box “components” contains the boxes “power assembly” and “running assembly,” and so on. There are many other kinds of structures produced by other operators such as “causes” which produce long chain structures of the form, “A causes B which causes C which causes D,” and so on. A functional description of the motorcycle uses this structure. The operators “exists,” “equals,” and “implies” produce still other structures. These structures are normally interrelated in patterns and paths so complex and so enormous no one person can understand more than a small part of them in his lifetime. The overall name of these interrelated structures, the genus of which the hierarchy of containment and structure of causation are just species, is system. The motorcycle is a system. A real system.

To speak of certain government and establishment institutions as “the system” is to speak correctly, since these organizations are founded upon the same structural conceptual relationships as a motorcycle. They are sustained by structural relationships even when they have lost all other meaning and purpose. People arrive at a factory and perform a totally meaningless task from eight to five without question because the structure demands that it be that way. There’s no villain, no “mean guy” who wants them to live meaningless lives, it’s just that the structure, the system demands it and no one is willing to take on the formidable task of changing the structure just because it is meaningless.

But to tear down a factory or to revolt against a government or to avoid repair of a motorcycle because it is a system is to attack effects rather than causes; and as long as the attack is upon effects only, no change is possible. The true system, the real system, is our present construction of systematic thought itself, rationality itself, and if a factory is torn down but the rationality which produced it is left standing, then that rationality will simply produce another factory. If a revolution destroys a systematic government, but the systematic patterns of thought that produced that government are left intact, then those patterns will repeat themselves in the succeeding government. There’s so much talk about the system. And so little understanding.

Still Ferrari-less

After all, there’s nothing quite like driving your Ferrari home to your 6,000 square foot mansion after a long, hard day of fighting for the cause.

This is how Amy Bell ended a polemic (even by my standards) against exorbitant nonprofit executive compensation, published in Forbes in December as “Nonprofit Millionaires”. That was rebutted today in Forbes by Betsy Brill (President of Strategic Philanthropy) in “Nonprofit CEOs Are Worth Every Dime”:

The _Chronicle_ survey only reflects data from the 325 highest funded nonprofit organizations, and thus represents only .02% of the 1.5 million registered nonprofits operating in the U.S. To suggest that the 30 some nonprofit executives (among them hospital CEOs, NCAA coaches and university presidents) who were paid more than $1 million in 2008 represent the irresponsible management and greed of an entire sector might be laughable if it weren’t also potentially detrimental. By taking the survey data out of context, critics may cause donors to question–or even to pull back–their charitable giving at a time when nonprofits are struggling to meet an increased demand for services in the face of government cutbacks and dwindling private support.

Bell’s criticism was published under “Philanthropy”, Brill’s response under “Intelligent Investing”.

Insufficient funds

Don’t be distracted by the vision; focus on the problem statement:

When the architects of our republic wrote the magnificent words of the Constitution and the Declaration of Independence, they were signing a promissory note to which every American was to fall heir. This note was a promise that all men, yes, black men as well as white men, would be guaranteed the “unalienable Rights” of “Life, Liberty and the pursuit of Happiness.” It is obvious today that America has defaulted on this promissory note, insofar as her citizens of color are concerned. Instead of honoring this sacred obligation, America has given the Negro people a bad check, a check which has come back marked “insufficient funds.”

From I Have a Dream. Have a just Martin Luther King Jr. Day.

Social media is women’s work

Some new commentary on the evolving nature of women’s work, as a follow-up to comments on gender-driven compensation in social work:

….as the social media world becomes more and more female-driven (after all, social media power users tend to be female) will it become “demoted” in the tech industry, seen as a “soft” profession with lower comparative salaries and less room for professional advancement/leadership? Has that already happened?

via Rebecca

The poor, the dead, and God are easily forgotten

Peter Brown’s “Remembering the Poor and the Aesthetic of Society” (Journal of Interdisciplinary History) presents a wonderful analysis of charity through a lens of history and society:

Looking at the medieval and (largely) early modern societies described herein with more ancient eyes reveals patterns of expectations that are familiar from the longer history of the three major religions studied in this collection. First and foremost, those who founded and administered the charitable institutions of early modern Europe and the Middle East plainly carried in the back of their minds what might be called a particular “aesthetic of society,” the outlines of which might be blurred by the quotidian routines of administration. This “aesthetic of society” amounted to a sharp sense of what constituted a good society and what constituted an ugly society, namely, one that neglected the poor or treated them inappropriately.

Europeans and Ottomans alike instantly noticed when charitable institutions were absent. Of the great imarets of the Ottoman empire, Evliya, the seventeenth-century traveler, wrote, “I, this poor one, have traveled 51 years and in the territories of 18 rulers, and there was nothing like our enviable institution.”

The article delves into comparisons of social norms of charity, which I have quoted from before:

Divided as European Protestants and Catholics were in their ideas about the good society, the differences between Christian Europe and the Ottoman Empire were even more decisive, subtle though they sometimes could be. Christian Europe concentrated on a quality of mercy that was essentially asymmetrical. It strove to integrate those who, otherwise, would have no place in society. As the founder of Christ’s Hospital wrote in the sixteenth century, “Christ has lain too long abroad . . . in the streets of London.” To him, those deserving of mercy were “lesser folk,” and those who “raised them up” were “like a God.” In Catholic countries, much charity was “redemptive,” directed to tainted groups who might yet come to be absorbed more fully into the Christian fold—including Jews, some of whom might yet be converted, and prostitutes, some of whom might yet be reformed. In the more bracing air of Protestant Hadleigh, however, “reform” meant making sure that those who were “badly governed in their bodies” (delinquent male beggars) were brought back to the labor force from which they had lapsed. For both Catholics and Protestants, the “reform” of errant groups was a dominant concern.

By contrast, in Ottoman society, receiving charity brought no shame. To go to an imaret was not to be “brought in from the cold.” Rich and poor were sustained by the carefully graded bounty of the sultan: “Hand in hand with the imperial generosity is that of a strictly run establishment, carefully regulating the movements of its clients and the sustenance each received.” The meals at the Ottoman imaret are reminiscent of the Roman convivium, great public banquets of the Roman emperors, in their judicious combination of hierarchy and outreach to all citizens. Nothing like it existed in Christian Europe.

So who cares? (This is always a good question to throw at the dewy-eyed young’uns):

One issue concerning the “aesthetic of society” that deserves to be stressed is often taken for granted in studies of poverty: Why should the poor matter in the first place? The heirs to centuries of concerted charitable effort by conscientious Jews, Christians, and Muslims are liable to forget that concern for the poor is, in many ways, a relatively recent development in the history of Europe and the Middle East, not necessarily shared by many non-European and non-Middle Eastern societies.

The Greco-Roman world had no place whatsoever for the poor in its “aesthetic of society.” But ancient Greeks and Romans were not thereby hardhearted or ungenerous. They were aware of the misery that surrounded them and often prepared to spend large sums on their fellows. But the beneficiaries of their acts of kindness were never defined as “the poor,” largely because the city stood at the center of the social imagination. The misery that touched them most acutely was the potential misery of their city. If Leland Stanford had lived in ancient Greece or in ancient Rome, his philanthropic activities would not have been directed toward “humanity,” even less toward “the poor,” but toward improving the amenities of San Francisco and the aesthetics of the citizen body as a whole. It would not have gone to the homeless or to the reform of prostitutes. Those who happened, economically, to be poor might have benefited from such philanthropy, but only insofar as they were members of the city, the great man’s “fellow-citizens.”

The emergence of the poor as a separate category and object of concern within the general population involved a slow and hesitant revolution in the entire “aesthetic” of ancient society, which was connected primarily with the rise of Christianity in the Roman world. But it also coincided with profound modifications in the image of the city itself. The self-image of a classical, city-bound society had to change before the “poor” became visible as a separate group within it.

Similarly, in the context of the Chinese empire’s governmental tradition, the victims of famine were not so much “the poor” as they were “subjects” who happened to need food, the better to be controlled and educated like everyone else. This state-centered image had to weaken considerably before Buddhist notions of “compassion” to “the poor” could spread in China. Until at least the eleventh century, acts of charity to the poor ranked low in the hierarchy of official values, dismissed as “little acts” and endowed with little public resonance. They were overshadowed by a robust state ideology of responsibility for famine relief, which put its trust, not on anything as frail as “compassion,” but on great state warehouses controlled (it was hoped) by public-spirited provincial governors.

If the phrase “aesthetic of society” connotes a view of the poor deemed fitting for a society, one implicit aspect of it notably absent from the ancient world and China was the intense feeling—shared by Jews, Christians, and Muslims—that outright neglect of the poor was ugly, and that charity was not only prudent but also beautiful. Despite the traditional limitations of charitable institutions—their perpetual shortfall in meeting widespread misery, their inward-looking quality, and the overbearing manner in which they frequently operated—they were undeniably worthwhile ventures. The officials who ran them and the rich who funded them could think of themselves as engaged in “a profoundly integrative activity.” This widespread feeling of contributing to a “beautiful” rather than an “ugly” society still needs to be explained.

Why remember the poor? There are many obvious answers to this question, most of which have been fully spelled out in recent scholarship. Jews, Christians, and Muslims were guardians of sacred scriptures that enjoined compassion for the poor and promised future rewards for it. Furthermore, in early modern Europe, in particular, charity to the poor came to mean more than merely pleasing God; it represented the solution to a pressing social problem. To provide for the poor and to police their movements was a prudent reaction to what scholars have revealed as an objective crisis caused by headlong demographic growth and a decline in the real value of wages.

Yet even this “objective” crisis had its “subjective” side. Contemporaries perceived the extent of the crisis in, say, Britain as amplified, subjectively, by a subtle change in the “aesthetic of society.” The poor had not only become more dangerous; their poverty had become, in itself, more shocking. As Wrightson recently showed, forms of poverty that had once been accepted as part of the human condition, about which little could be done, became much more challenging wherever larger sections of a community became accustomed to higher levels of comfort. When poverty could no longer be taken for granted, to overlook the poor appeared, increasingly, to be the mark of an “ugly” society. Moreover, that the potentially “forgettable” segments of society were usually articulate and well educated, able to plead their cause to their more hardhearted contemporaries, had something to do with how indecorous, if not cruel, forgetting them would be.

Paul’s injunction to “remember the poor” (Galatians 2:10) and its equivalents in Jewish and Muslim societies warned about far more than a lapse of memory. It pointed to a brutal act of social excision the reverberations of which would not be confined to the narrow corridor where rich and poor met through the working of charitable institutions. The charitable institutions of the time present the poor, primarily, as persons in search of elemental needs—food, clothing, and work. But hunger and exposure were only the “presenting symptoms” of a deeper misery. Put bluntly, the heart of the problem was that the poor were eminently forgettable persons. In many different ways, they lost access to the networks that had lodged them in the memory of their fellows. Lacking the support of family and neighbors, the poor were on their own, floating into the vast world of the unremembered. This slippage into oblivion is strikingly evident in Jewish Midrash of the book of Proverbs, in which statements on the need to respect the poor are attached to the need to respect the dead. Ultimately helpless, the dead also depended entirely on the capacity of others to remember them. The dead represented the furthest pole of oblivion toward which the poor already drifted.

Fortunately for the poor, however, Jews, Christians, and Muslims not only had the example of their own dead—whom it was both shameful and inhuman to forget—but also that of God Himself, who was invisible, at least for the time being. Of all the eminently forgettable persons who ringed the fringes of a medieval and early modern society, God was the one most liable to be forgotten by comfortable and confident worldlings. The Qur’an equated those who denied the Day of Judgment with those who rejected orphans and neglected the feeding of the poor (Ma’un 107:1–3). The pious person, by contrast, forgot neither relatives nor strangers who were impoverished. Even though he might have had every reason to wish that they had never existed, he went out of his way to “feed them . . . and to speak kindly to them” (Nisa’ 4.36, 86).

The poor challenged the memory like God. They were scarcely visible creatures who, nonetheless, should not be forgotten. As Michael Bonner shows, the poor, the masakin of the Qur’an and of its early medieval interpreters, are “unsettling, ambiguous [persons] . . . . whom we may or may not know.” In all three religions, charity to the easily forgotten poor was locked into an entire social pedagogy that supported the memory of a God who, also, was all-too-easily forgotten.

The poor were not the only persons in a medieval or an early modern society who might become victims of forgetfulness. Many other members of Jewish, Christian, and Islamic societies—and often the most vocal members—found themselves in a position strangely homologous to, or overlapping, that of the poor, and they often proved to be most articulate in pressing the claims of the poor. They also demanded to be remembered even if, by the normal standards of society, they did nothing particularly memorable.

Seen with the hard eyes of those who exercised real power in their societies, the religious leaders of all three religions were eminently “forgettable” persons. They contributed nothing of obvious importance to society.

And of course, I respect any scholar who manages to connect their paper to their ability to continue drawing a salary:

The manner in which a society remembers its forgettable persons and characterizes the failure to do so is a sensitive indicator of its tolerance for a certain amount of apparently unnecessary, even irrelevant, cultural and religious activity. What is at stake is more than generosity and compassion. It is the necessary heedlessness by which any complex society can find a place for the less conspicuous elements of its cultural differentiation and social health. Scholars owe much to the ancient injunction to “remember the poor.”

American Press Subsidies

A brief history of the United States’ subsidies to journalism and the press, from The Nation’s “How to Save Journalism” by John Nichols and Robert McChesney:

Even those sympathetic to subsidies do not grasp just how prevalent they have been in American history. From the days of Washington, Jefferson and Madison through those of Andrew Jackson to the mid-nineteenth century, enormous printing and postal subsidies were the order of the day. The need for them was rarely questioned, which is perhaps one reason they have been so easily overlooked. They were developed with the intention of expanding the quantity, quality and range of journalism–and they were astronomical by today’s standards. If, for example, the United States had devoted the same percentage of its GDP to journalism subsidies in 2009 as it did in the 1840s, we calculate that the allocation would have been $30 billion. In contrast, the federal subsidy last year for all of public broadcasting, not just journalism, was around $400 million.

The experience of America’s first century demonstrates that subsidies of the sort we suggest pose no threat to democratic discourse; in fact, they foster it. Postal subsidies historically applied to all newspapers, regardless of viewpoint. Printing subsidies were spread among all major parties and factions. Of course, some papers were rabidly partisan, even irresponsible. But serious historians of the era are unanimous in holding that the extraordinary and diverse print culture that resulted from these subsidies built a foundation for the growth and consolidation of American democracy. Subsidies made possible much of the abolitionist press that led the fight against slavery.

Our research suggests that press subsidies may well have been the second greatest expense of the federal budget of the early Republic, following the military. This commitment to nurturing and sustaining a free press was what was truly distinctive about America compared with European nations that had little press subsidy, fewer newspapers and magazines per capita, and far less democracy. This history was forgotten by the late nineteenth century, when commercial interests realized that newspaper publishing bankrolled by advertising was a goldmine, especially in monopolistic markets. Huge subsidies continued to the present, albeit at lower rates than during the first few generations of the Republic. But today’s direct and indirect subsidies–which include postal subsidies, business tax deductions for advertising, subsidies for journalism education, legal notices in papers, free monopoly licenses to scarce and lucrative radio and TV channels, and lax enforcement of anti-trust laws–have been pocketed by commercial interests even as they and their minions have lectured us on the importance of keeping the hands of government off the press. It was the hypocrisy of the current system–with subsidies and government policies made ostensibly in the public interest but actually carved out behind closed doors to benefit powerful commercial interests–that fueled the extraordinary growth of the media reform movement over the past decade.

Two tales of Island 94

The title promises two tales; both are actually the same story, told with different levels of detail and suspense. The first is from _The Earthquake that America Forgot_ by Dr. David Stewart and Dr. Ray Knox. (These authors have a whole series of books about the New Madrid earthquakes, all of which seem to include the tongue-in-cheek warranty above.)

One hundred miles south of the epicenter of the first great 8.6 quake was Island #94. Vicksburg, Mississippi is near that location today. It was known as “Stack Island” but also as “Crow’s Nest” and “Rogue’s Rest.” It was inhabited by pirates. Crow’s Nest was well-suited for staging an ambush because boats approaching the island could be spotted several miles away in both directions and because boats had to thread a long narrow channel called “Nine-Mile Reach” on one side of the island.

It just so happened that on Sunday night, December 15, a Captain Paul Sarpy from St. Louis had tied up his boat for the night on the north end of Island #94. He was accompanied by his crew and family.

In those days the river was too treacherous to ply by night. There were shifting sand bars and many snags and stumps that could damage, sink or capsize a boat. There was no U.S. Army Corps of Engineers, as we have today, to dredge the river, mark it with buoys and stabilize the channel with jetties and dikes. Neither were there any lights on the banks for pilots to sight in making their turns through the many bends of the river. River maps were also poor and unreliable. Zadok Cramer’s Navigator was the newest and the best, but it was only updated every year or two, while the river changed constantly. Therefore, it was the custom for boatmen to tie up at night and travel only by daylight.

Captain Sarpy didn’t know about the pirates. When he landed, he and several crew members went ashore to get some exercise and stretch their legs. As they strolled through the trees, they came upon an encampment of pirates. They had not been seen. Hiding where they stood, they listened, overhearing the pirates’ plans to ambush a boat with a valuable cargo and “a considerable sum of money” that was supposed to pass by any day now on its way from St. Louis to New Orleans. As they eavesdropped with intense concentration, crouching behind the bushes, they overheard a name. “Sarpy.” It was Sarpy’s boat they were after!

Slipping away without being discovered, Sarpy and his men went back to their boat. Late that night, under cover of darkness, they drifted around the island undetected by the pirates. They tied up downstream just far enough to make a quick getaway when daylight came.

During the night strong vibrations shook their boat. At first they were afraid the pirates had found them and were boarding for plunder. But no one came aboard. The tremors continued, accompanied with the agitation of large waves that rocked their boat.

That morning, in the dim dawn light as the fog and mist began to clear, Sarpy’s sailors looked upstream. Island #94 had completely disappeared—pirates and all!

For a hundred years there was no Stack Island, no Island #94. Since then the river has redeposited another body of sand in that location which carries the same name and number today. But it is not the same isle where Captain Sarpy so narrowly escaped a brutal ambush and was saved by an earthquake.

Further to the north, at the end of Long Reach, near the present-day town of Osceola, was Island #32. You won’t find it on any modern navigation maps. There is an Island #31 and an Island #33 but no Island #32. It, too, was to disappear in the darkness of an early December morning—but not yet. As of December 16, 1811, Island #32 was still there, but its days were numbered. In less than a week, it, too, would follow the fate of Island #94.

I suspect the previous story is quite close to the St. Louis Globe-Democrat’s “The Last Night of Island Ninety-Four,” which is mentioned in our next story, coming from Jay Feldman’s When the Mississippi Ran Backwards:

A tale published in the St. Louis Globe-Democrat in 1902 purported to tell the story of “The Last Night of Island Ninety-Four.” According to this account, on the evening of December 15, a Captain Sarpy was en route from St. Louis to New Orleans in his keelboat, the Belle Heloise, with his wife and daughter and a large sum of money. At nightfall, the keelboat tied up at Island 94. This island had been a long-standing lair for river denizens of every stripe, including Samuel Mason, the notorious river pirate who had been apprehended in Little Prairie a decade earlier, only to escape while being transported on the river. Two years before Sarpy’s trip, however, a force of 150 keelboatmen had invaded the island and cleaned out the den of thieves, after which the island became a safe haven, and now, Sarpy thought to use the island’s abandoned blockhouse to lodge his family and crew for the night.

As Sarpy and two of his men explored the island, however, they overheard talking in the blockhouse and, peering in the windows, listened as a group of fifteen river pirates discussed plans to fall upon the Belle Heloise the following morning. Sarpy and his crewmen hurried back to the boat and quietly pushed off, tying up at a hidden place in the willows on the west bank about a mile below Island 94.

The following morning, after weathering a night of earthquakes, Sarpy looked upstream to see that Island 94 had disintegrated—the entire landmass was gone, and presumably, its criminal inhabitants along with it.

Whether or not the story is true, Island 94 did indeed disappear.

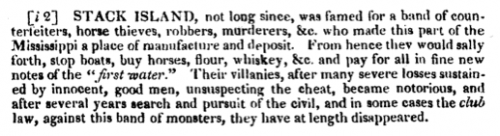

Notes of the first water

Above is from the addendum of Zadok Cramer’s The Navigator from which I have quoted previously. Written buoyantly, it makes jokes of specie (‘new notes of the “first water”’ refers to the breadth of bank notes available at the time) and law (“club law” is the lynch mob). The text:

STACK ISLAND, not long since, was famed for a band of counterfeiters, horse thieves, robbers, murderers, &c. who made this part of the Mississippi a place of manufacture and deposit. From hence they would sally forth, stop boats, buy horses, flour, whiskey, &c. and pay for all in fine new notes of the “first water.” Their villainies, after many severe losses sustained by innocent, good men, unsuspecting the cheat, became notorious, and after several years search and pursuit of the civil, and in some cases the club law, against this band of monsters, they have at length disappeared.

Radical volunteerism, or not

From the NY Times:

Teach for America, a corps of recent college graduates who sign up to teach in some of the nation’s most troubled schools, has become a campus phenomenon, drawing huge numbers of applicants willing to commit two years of their lives.

But a new study has found that their dedication to improving society at large does not necessarily extend beyond their Teach for America service.

In areas like voting, charitable giving and civic engagement, graduates of the program lag behind those who were accepted but declined and those who dropped out before completing their two years, according to Doug McAdam, a sociologist at Stanford University, who conducted the study with a colleague, Cynthia Brandt.

The reasons for the lower rates of civic involvement, Professor McAdam said, include not only exhaustion and burnout, but also disillusionment with Teach for America’s approach to the issue of educational inequity, among other factors.

Third paragraph, “those who were accepted but declined”: that’s me.

Also, as someone who promotes the “service makes you a better citizen” line, I am really intrigued to read this:

Professor McAdam’s findings that nearly all of Freedom Summer’s participants were still engaged in progressive activism when he tracked them down 20 years later have contributed to the widely held notion that civic advocacy and service among the young make for better citizens.

…

“Back in the ’60s, if you signed up for Freedom Summer, it was perceived to be countercultural,” said Professor Reich, who taught sixth grade in Houston as a member of the Teach for America corps. “But unlike doing Freedom Summer, joining Teach for America is part of climbing up the elite ladder — it’s part of joining the system, the meritocracy.”